Learning to Improve at United Schools Network

Note: Here’s part ten in our Learning to Improve series, which is the primary focus of the School Performance Institute (SPI) blog this year. In it, we spotlight issues related to building the capacity to do improvement science in schools while working on an important problem of practice.

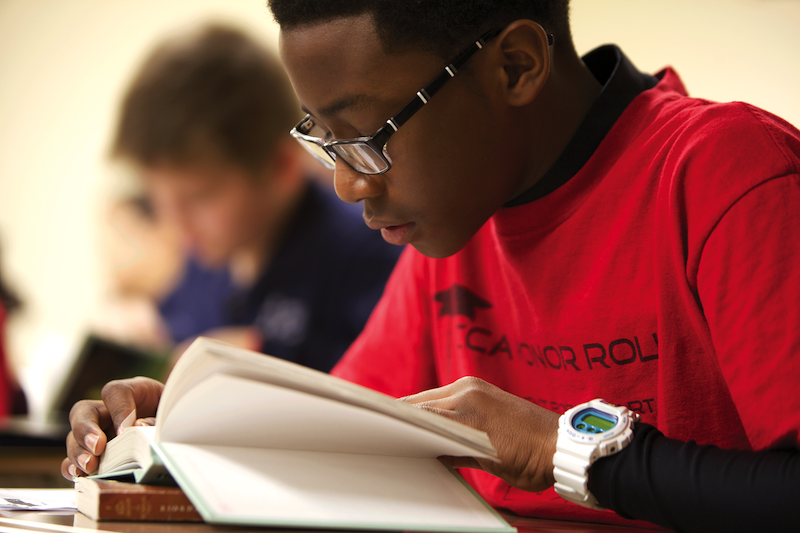

James’ reading grade dropped from a B in 7th grade to a D during the first trimester of his 8th grade year.¹ The rest of his grades were a C or higher, his attendance rate was above 96%, and he had never been in serious trouble. Most people would look at James’ academic, attendance, and behavior stats and not see a student who is in need of intervention. We disagree. At the very moment that his reading grade dropped, James was in need of extra support. Off-track 8th graders become off-track 9th graders, and this is especially problematic because freshman year is the make-or-break year for high school graduation.² In most schools, a student like James wouldn’t likely get much attention. But research suggests that his grade drop is a leading indicator of things to come. Grades tend to drop in high school, compared to middle school, by about half a GPA point, and students receiving Ds in the middle grades are likely to receive Fs in high school.³ When viewed through this lens, there is urgency to get James back on-track.

At United Schools Network (USN), the picture of James’ educational trajectory began to emerge as we learned of the on-track indicator systems being developed by organizations such as the University of Chicago Consortium on School Research and CORE Districts in California. One such system, developed by CORE Districts in Oakland, predicted with 96% accuracy which 8th graders would go on to graduate from high school.

This learning led to the launch of our 8th Grade On-Track project at Columbus Collegiate Academy-Main St., one of the four schools that make up USN, during the 2018-2019 school year. By adopting CORE’s 8th grade on-track indicator system at the outset of the project, we knew that we had a strong definition of what it means to be on-track for high school graduation. While that certainly isn’t the end goal for our students, it’s a necessary next step to having college and career options. In order to be considered on-track, students need to meet all of the indicators outlined in the chart below.

However, even though we now had a definition of on-track for high school readiness, we didn’t initially have an answer for an important question. How do we get off-track students like James back on-track?

Improvement Science

As it happened, the discovery of the on-track indicator research coincided with a multi-year study of improvement science at School Performance Institute (SPI), the learning and improvement arm of USN. Improvement science was originally devised in the healthcare sector at the Institute for Healthcare Improvement (IHI), and the Carnegie Foundation for the Advancement of Teaching has played a leading role in bringing it to the field of education. As defined by IHI, improvement science “is an applied science that emphasizes innovation, rapid-cycle testing in the field, and spread in order to generate learning about what changes, in which contexts, produce improvements. It is characterized by the combination of expert subject knowledge with improvement methods and tools. It is multidisciplinary — drawing on clinical science, systems theory, psychology, statistics, and other fields.”⁴

Improvement science as a methodology represents a significant shift in our field. We believe that the education sector has a serious learning-to-improve problem. Too often in education we go fast, learn slow, and fail to appreciate what it actually takes to make promising ideas work in practice. At the same time, student outcomes, especially for students of color and students living in poverty, are far from where we aspire for them to be. While the best of Ohio’s and the U.S.’s educational system achieves remarkable results, we have a persistent and disturbing number of young adults who struggle to achieve at even basic levels.

We’ve ascribed a narrative to this failure that often includes blaming school leaders and teachers. While there are certainly educators who shouldn’t be leading classrooms, schools, and districts, the evidence from more than a half century of improvement efforts across numerous industries suggests something quite different. Improving results in complex systems is not primarily about individual competence, but rather it is about designing better processes for carrying out common work problems.⁵

Education reform is littered with big ideas that were rolled out fast and big only to be abandoned a few years later. Left in the never-ending wake of good intentions are teachers with initiative fatigue. And the worst part is that within many of those ideas was a kernel of promise. Too often though, the implementation plan was underspecified, and the people on the ground didn’t have the tools and processes necessary to make those ideas work.

As we introduced improvement science to our network, the SPI team emphasized three key ways that the methodology differs from typical reform efforts. First, this science involves going on an improvement journey. It’s not a one-time workshop or a top-down change mandate. Instead, it’s an effort to increase the capacity of an organization to produce successful outcomes reliably for different groups of students, being educated by different teachers, and in varied contexts. Second, a core ethos of improvement science is that our ideas are “probably wrong, definitely incomplete.” We first saw this phrase used during an improvement summit hosted at the Carnegie Foundation for the Advancement of Teaching. Improvement science counsels humility when considering how much must be learned in order to get a change idea to work in practice reliably.

And third, one of the counterintuitive ideas of improvement science is that while the goal is to improve outcomes for all students, the work often starts with a single teacher, student, or classroom. USN’s 8th Grade On-Track project started by focusing on individual off-track students in two classrooms at Columbus Collegiate Academy. Starting small allows teams to manage the complexity inherent in educational improvement work. Only after change ideas are tested and data verify their effectiveness under a variety of conditions are they considered for spread to other students, classrooms, grade levels, and schools.

School-Based Improvement Teams & PDSA Cycles

All attempts to improve schools are socially intensive and human-resource-intensive activities. The critical question for these activities is not “What works?” but instead “What works, for whom, and under what set of conditions?” We believe that School-Based Improvement Teams working with an improvement advisor are in the best position to answer these questions. When deliberately assembled and trained in improvement science methodology, these teams can function as the engines that drive improvement work within schools. School-Based Improvement Teams are largely made up of teachers and other school-based staff who serve the students on whom the improvement work is focused. They are asked to be researchers who engage in rapid cycles of learning in which change ideas are tested under the conditions in which, and with the students with whom, they would ultimately be used.

If School-Based Improvement Teams are the engines that drive improvement, Plan-Do-Study-Act (PDSA) cycles are the fuel that makes those engines go. Each PDSA cycle is a mini-experiment designed to test a change idea. Observed outcomes are compared to predictions, and the differences between the two become the learning that drives decisions about next steps with the intervention. The know-how generated through each successive PDSA cycle ultimately becomes the practice-based evidence that demonstrates that some process, tool, or modified staff role or relationship works effectively under a variety of conditions and that quality outcomes will reliably ensue.
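For readers who think in code, the compare-prediction-to-outcome logic of a PDSA cycle can be sketched in a few lines of Python. This is only an illustration, not a tool used in the project: the `PDSACycle` class, the `tolerance` threshold, the adopt/adapt/abandon decision rule, and the example numbers are all hypothetical assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass
class PDSACycle:
    """One Plan-Do-Study-Act cycle for a single change idea (illustrative only)."""
    change_idea: str   # Plan: the change being tested
    baseline: float    # Plan: the measure before the change
    predicted: float   # Plan: the outcome the team predicts
    observed: float    # Do: the outcome actually measured

    def gap(self) -> float:
        """Study: difference between the observed outcome and the prediction."""
        return self.observed - self.predicted

    def next_step(self, tolerance: float = 5.0) -> str:
        """Act: a hypothetical decision rule, assumed for this sketch."""
        if self.observed >= self.predicted - tolerance:
            return "adopt"    # outcome met, or nearly met, the prediction
        if self.observed > self.baseline:
            return "adapt"    # some improvement, so revise the idea and retest
        return "abandon"      # no improvement over the baseline


# Made-up numbers for a made-up change idea, not data from the project.
cycle = PDSACycle(
    change_idea="weekly reading check-in",
    baseline=60.0,
    predicted=75.0,
    observed=68.0,
)
print(cycle.gap())        # -7.0
print(cycle.next_step())  # adapt
```

The point of the sketch is that the prediction is written down before the test is run, so the gap between prediction and observation is available as something to learn from regardless of which way it falls.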

Support Hubs & Improvement Networks

Improvement science happens locally through the work of School-Based Improvement Teams, but there is also a growing movement across the United States to create networks of these teams that are working on a shared problem. At the center of these improvement networks is a support hub that coordinates this work. Thought leaders in this space have given these networks different names, and while there are degrees of difference among them, defining features include site-based teams working with a central hub to improve important student outcomes. Carnegie calls these intentionally designed social organizations Networked Improvement Communities. IHI calls these networks Collaboratives. The Gates Foundation is funding this work through its Networks for School Improvement initiative, with a centrally located support hub that Gates calls an intermediary.

Like professional learning communities, participants in improvement networks come together to learn alongside like-minded professionals. However, unlike PLCs, a defining feature of improvement networks is a shared aim. At their core, these structured networks are working to identify solutions to local problems and using data to drive continuous improvement. The support hubs play a critical role in this work as both improvement science advisor and project manager.

More on How We’re Learning to Improve

With funding support from the Martha Holden Jennings Foundation, School Performance Institute is partnering with Columbus Collegiate Academy to improve its high school readiness rates using improvement science methodology. SPI serves as both the improvement advisor and project manager for School-Based Improvement Teams working to improve student outcomes. Through an intensive study of improvement science as well as through leading improvement science projects at USN schools, we’ve gained significant experience with its tools and techniques. If you are interested in learning more about our improvement science work, please email us at spi@unitedschoolsnetwork.org.

We’re also opening our doors to share our improvement practices through our unique Study the Network workshops that take place throughout the school year. The workshop calendar for the 2019-2020 school year will be shared later this summer.

John A. Dues is the Director of School Performance Institute and the Chief Learning Officer for United Schools Network. The School Performance Institute is the learning and improvement arm of United Schools Network, an education nonprofit in Columbus, Ohio. Send feedback to jdues@unitedschoolsnetwork.org.

¹James is a pseudonym.

²Phillips, Emily Krone. The Make or Break Year: Solving the Dropout Crisis One Ninth Grader at a Time. The New Press. New York and London, 2019.

³Rosenkranz, T., de la Torre, M., Stevens, W.D., and Allensworth, E. (2014). Free to Fail or On-Track To College: Why Grades Drop When Students Enter High School and What Adults Can Do About It. Chicago, IL: University of Chicago Consortium on Chicago School Research.

⁴Retrieved from http://www.ihi.org/about/Pages/ScienceofImprovement.aspx on May 9, 2019.

⁵Bryk, Anthony; Gomez, Louis M.; Grunow, Alicia; LeMahieu, Paul G. Learning to Improve: How America’s Schools Can Get Better at Getting Better. Harvard Education Press. Cambridge, MA, 2015.